Testkit Mastery, Part 1: Overcoming Testing Challenges for Complex Libraries

Testing complex libraries in non-production environments isn’t always straightforward. Whether you’re working with systems like state management libraries, custom hooks, or data-fetching utilities, replicating their behavior outside of production often feels like solving a frustrating puzzle. Without the full application context, traditional tools fall short, turning testing into a complicated, time-consuming process.

This series explores how we tackled these challenges by designing a flexible and developer-friendly testkit to simplify testing for Vulcan, our internal global state management library. While this is our story, the strategies and frameworks we applied can help any library maintainer improve testing for their own sophisticated systems.

The series will be released over the coming weeks. Here’s what we’ll cover:

- Part 1: Overcoming Testing Challenges for Complex Libraries

We’ll examine the challenges of testing complex libraries and share the decisions that shaped our strategy for building the testkit.

- Part 2: Designing a Developer-Friendly Testkit

We’ll walk through prototyping the testkit API, defining the key requirements for usability, flexibility, and TypeScript integration.

- Part 3: Building the Core Structure of a Flexible and Reliable Testkit

We’ll discuss how we implemented the core structure of the testkit, balancing flexibility and consistency to meet real-world testing needs.

- Part 4: Design for Easy Integration into Testing Environments

We’ll demonstrate how to seamlessly integrate the testkit into environments like Vitest and Storybook with minimal setup.

- Part 5: Key Takeaways and Lessons Learned for Building Better Testkits

We’ll reflect on key takeaways, lessons learned, and practical insights for building better testkits.

By the end of this series, you’ll have actionable insights for building testkits that make testing easier, faster, and more reliable—even for the most complex libraries. Let’s dive in!

Understanding the Problem

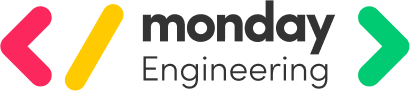

To understand the problem of testing complex libraries, let’s look at an example from our global state management library, Vulcan. Designed for our microfrontend architecture, Vulcan acts as a caching layer (similar to React Query): it ensures data is loaded only once and shared across microfrontends, preventing redundant network requests. In production, a platform-level host exposes Vulcan as a global instance, making it available throughout the system.
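To make the caching idea concrete, here is a minimal sketch of request deduplication, assuming a cache keyed by string that stores one promise per key. The names `QueryCache` and `fetchOnce` are hypothetical and are not Vulcan’s API; a real cache would also need error handling, invalidation, and expiry.

```typescript
// Hypothetical sketch: QueryCache and fetchOnce are illustrative names,
// not Vulcan's actual API. Errors, invalidation, and expiry are omitted.
type Loader<T> = () => Promise<T>;

class QueryCache {
  // One promise per key, so concurrent callers share a single in-flight load.
  private readonly entries = new Map<string, Promise<unknown>>();

  fetchOnce<T>(key: string, load: Loader<T>): Promise<T> {
    const existing = this.entries.get(key);
    if (existing !== undefined) {
      // Cache hit: reuse the stored promise instead of loading again.
      return existing as Promise<T>;
    }
    const pending = load();
    this.entries.set(key, pending);
    return pending;
  }
}

// Usage: two consumers (e.g. two microfrontends) request the same user.
let networkCalls = 0;
const loadUser = async () => {
  networkCalls += 1; // stands in for a real network request
  return { id: "42", name: "Ada" };
};

const cache = new QueryCache();
const first = cache.fetchOnce("user:42", loadUser);
const second = cache.fetchOnce("user:42", loadUser);

console.log(first === second, networkCalls); // true 1
```

In this sketch’s terms, sharing only works if every microfrontend talks to the same cache instance, which is why the host-provided global matters.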

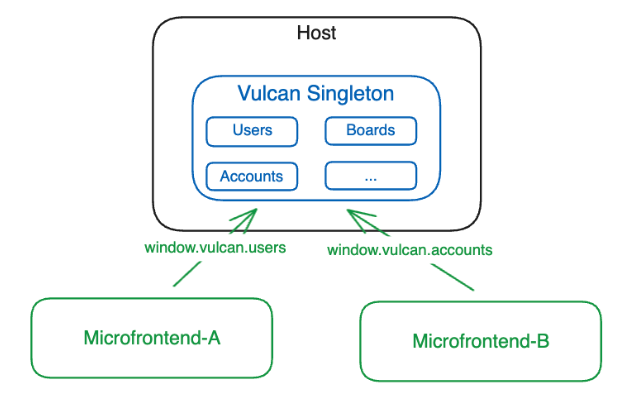

However, in testing environments like Vitest and Storybook, this host doesn’t exist. Without it, Vulcan isn’t accessible, which means that any tests or stories relying on Vulcan’s global state would break. This is a common issue with platform-level libraries that depend on a specific production setup to operate correctly. These environments just aren’t set up to handle Vulcan’s centralized state management out of the box.
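The failure mode can be reproduced in a few lines. The sketch below assumes, purely for illustration, that the host registers the shared instance on `globalThis` under a `vulcan` key; `getGlobalVulcan`, `registerHostVulcan`, and `VulcanInstance` are hypothetical names, not how Vulcan is actually wired.

```typescript
// Hypothetical: assumes the host registers the shared instance on globalThis
// under a "vulcan" key. Names and key are illustrative assumptions.
interface VulcanInstance {
  version: string;
}

type HostGlobals = { vulcan?: VulcanInstance };

function getGlobalVulcan(): VulcanInstance {
  const instance = (globalThis as unknown as HostGlobals).vulcan;
  if (!instance) {
    // Nothing registered: no platform host has run (e.g. in Vitest or Storybook).
    throw new Error("Vulcan is unavailable: no host registered a global instance");
  }
  return instance;
}

// Stands in for what a platform host would do at startup in production.
function registerHostVulcan(instance: VulcanInstance): void {
  (globalThis as unknown as HostGlobals).vulcan = instance;
}

let failedWithoutHost = false;
try {
  getGlobalVulcan();
} catch {
  failedWithoutHost = true;
}

registerHostVulcan({ version: "host" });
console.log(failedWithoutHost, getGlobalVulcan().version); // true host
```

In a test environment nothing performs the registration step, so every lookup takes the throwing branch.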

This posed a clear challenge: we needed a way to mock Vulcan’s behavior in testing environments so that tests and stories could run independently of the production setup. But simply mocking Vulcan wasn’t enough; our solution needed to be flexible and easy to use, and it had to expose the same API as the real Vulcan to keep the developer experience consistent.

First Step: Mapping Out Common Use Cases

To ensure the testkit would be both intuitive and useful, we first needed to understand how Vulcan was being used across the platform. Vulcan’s API is organized around slices, each representing a different domain (e.g., user, board). Each slice contains queries that retrieve specific data, and developers use the results of those queries to manage component state.
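The slice-and-query structure can be pictured as nested objects. This is a hypothetical model: we assume that a query call such as `user.getById(id)` returns a descriptor (a cache key plus a loader) that a hook like `useQuery` would consume. `QueryDescriptor`, `vulcanModel`, and the key format are illustrative, since Vulcan’s real types are not shown in this article.

```typescript
// Hypothetical model of slices and queries; not Vulcan's real type definitions.
interface QueryDescriptor<T> {
  key: string;            // identifies the data in a shared cache
  load: () => Promise<T>; // how to fetch the data when it is not cached
}

interface User { id: string; name: string }
interface Board { id: string; title: string }

// Each slice groups the queries for one domain.
const user = {
  getById: (id: string): QueryDescriptor<User> => ({
    key: `user.getById:${id}`,
    load: async () => ({ id, name: "placeholder" }), // stand-in loader
  }),
};

const board = {
  getById: (id: string): QueryDescriptor<Board> => ({
    key: `board.getById:${id}`,
    load: async () => ({ id, title: "placeholder" }), // stand-in loader
  }),
};

// The top-level object exposes slices by domain name.
const vulcanModel = { user, board };

const query = vulcanModel.user.getById("42");
console.log(query.key); // user.getById:42
```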

Inspired by React Query, these queries always return metadata that developers can rely on to control the component’s behavior—properties like isLoading, isError, and data. For example, a typical usage of Vulcan in a React component might look like this:

```jsx
import vulcan from 'mondaycom/vulcan';

export const UserName = ({ userId }) => {
  // Subscribe to the "user by ID" query; the result carries query metadata.
  const { data, isLoading, isError } = vulcan.useQuery(vulcan.user.getById(userId));

  // Show a placeholder until the query has resolved.
  if (isLoading) {
    return <div>Loading user data...</div>;
  }

  // A failed query has no data to render.
  if (isError) {
    return <div>Could not load user.</div>;
  }

  return <div>User name: {data.name}</div>;
};
```

In this example, the component initially displays a loading state while waiting for the data, then displays the user’s name once it’s available. This pattern is core to Vulcan’s design: components react to live updates of the query state as it changes over time.
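Because the hook’s output changes over time, a test double has to reproduce the same sequence of states. The sketch below models that sequence without React: a hypothetical `QueryState` class emits a loading result and then a success (or error) result to its subscribers, and a `render` function mirrors the component’s branching. The class, its methods, and the result shape are assumptions made for illustration, not part of Vulcan.

```typescript
// Hypothetical sketch of query state changing over time; not Vulcan's API.
interface QueryResult<T> {
  isLoading: boolean;
  isError: boolean;
  data: T | undefined;
}

type Listener<T> = (result: QueryResult<T>) => void;

class QueryState<T> {
  private result: QueryResult<T> = { isLoading: true, isError: false, data: undefined };
  private readonly listeners = new Set<Listener<T>>();

  subscribe(listener: Listener<T>): () => void {
    this.listeners.add(listener);
    listener(this.result); // deliver the current state right away
    return () => {
      this.listeners.delete(listener);
    };
  }

  resolve(data: T): void {
    this.update({ isLoading: false, isError: false, data });
  }

  fail(): void {
    this.update({ isLoading: false, isError: true, data: undefined });
  }

  private update(next: QueryResult<T>): void {
    this.result = next;
    this.listeners.forEach((listener) => listener(next));
  }
}

// Mirrors the UserName component's branching, returning text instead of JSX.
function render(result: QueryResult<{ name: string }>): string {
  if (result.isLoading) return "Loading user data...";
  if (result.isError || result.data === undefined) return "Could not load user.";
  return `User name: ${result.data.name}`;
}

const userQuery = new QueryState<{ name: string }>();
const rendered: string[] = [];
userQuery.subscribe((result) => {
  rendered.push(render(result));
});
userQuery.resolve({ name: "Ada" });
// rendered now holds the loading text followed by "User name: Ada".
```

Driving `resolve` or `fail` from a test is one way to exercise each branch of a component deterministically.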

Understanding these usage patterns guided our priorities for the testkit, as we wanted to preserve Vulcan’s production behavior as closely as possible. This approach would help maintain a consistent developer experience between production and testing environments.

Defining the Mocking Strategy

With a clear understanding of how Vulcan was being used, the next step was to decide on the best way to mock its functionality in tests. To keep the developer experience as close as possible to production, we wanted the testkit to simulate Vulcan’s behavior in a way that matched its real-world usage.

This led us to consider two primary options:

- Mocking the API Functions Directly: This approach would involve replacing Vulcan’s methods (like getById above) with mock implementations. It would let us simulate the real API without making actual HTTP requests, giving developers control over the returned data and query states.

- Mocking the Underlying HTTP Layer: Alternatively, we could intercept the HTTP requests made by Vulcan and provide mock responses. Vulcan itself would behave as if it were making real network requests, but the responses would be controlled by the testkit.
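To see what the HTTP-layer option asks of a test author, here is a sketch of an HTTP-level double. It assumes, hypothetically, that a query loads users through an injectable `HttpGet` function from an `/api/users/:id` route; `HttpGet`, `fetchUserById`, `createHttpStub`, and the route are illustrative and do not come from Vulcan. The key point is that the stub is keyed by URL, so the test must know the library’s routes.

```typescript
// Hypothetical names throughout: HttpGet, fetchUserById, createHttpStub, and
// the "/api/users/:id" route are illustrative, not Vulcan internals.
type HttpGet = (url: string) => Promise<unknown>;

interface User { id: string; name: string }

// A query implementation that loads data through an injectable HTTP function.
async function fetchUserById(http: HttpGet, id: string): Promise<User> {
  const body = await http(`/api/users/${id}`);
  return body as User; // no runtime validation in this sketch
}

// HTTP-level test double: canned responses are keyed by URL, so the test
// has to know exactly which routes the library requests.
function createHttpStub(routes: Record<string, unknown>) {
  const requested: string[] = [];
  const get: HttpGet = async (url) => {
    requested.push(url);
    if (!(url in routes)) {
      throw new Error(`Unmocked route: ${url}`);
    }
    return routes[url];
  };
  return { get, requested };
}

const stub = createHttpStub({ "/api/users/42": { id: "42", name: "Ada" } });
const pending = fetchUserById(stub.get, "42");

// The URL is recorded as soon as the call starts, before the promise settles.
console.log(stub.requested); // contains "/api/users/42"
pending.then((user) => console.log(user.name)); // logs "Ada" once resolved
```

If the library later changes that route, every test written this way has to change with it.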

After careful consideration, we chose to mock Vulcan’s API directly for two main reasons:

- Encapsulation of Implementation Details: Mocking the API functions keeps the abstraction intact. Developers using the testkit don’t need to know or care about the HTTP routes used by Vulcan, preserving the separation of concerns.

- Handling Additional Complexities: Some of Vulcan’s API calls rely on more than just HTTP, such as local storage or other data sources. Mocking only the HTTP layer wouldn’t be enough to simulate this behavior accurately.
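By contrast, an API-level double replaces the query function itself, so it does not matter whether the real implementation reads from the network, local storage, or another source. The sketch below is hypothetical: `UserSlice`, `createMockUserSlice`, and the result shape are assumptions, and for brevity the query call returns its current state directly rather than going through a hook like `useQuery`. Typing the mock as `UserSlice` lets the TypeScript compiler flag any drift from the API’s shape.

```typescript
// Hypothetical types; Vulcan's real interfaces are not shown in this article.
interface User { id: string; name: string }

interface QueryResult<T> {
  isLoading: boolean;
  isError: boolean;
  data: T | undefined;
}

// Simplified slice: the query returns its current state directly.
interface UserSlice {
  getById(id: string): QueryResult<User>;
}

// API-level test double. No HTTP routes or storage keys are involved:
// the test states which users exist and which queries are still loading.
function createMockUserSlice(users: Record<string, User>, loadingIds: string[] = []): UserSlice {
  return {
    getById(id) {
      if (loadingIds.includes(id)) {
        return { isLoading: true, isError: false, data: undefined };
      }
      const found = users[id];
      return found
        ? { isLoading: false, isError: false, data: found }
        : { isLoading: false, isError: true, data: undefined };
    },
  };
}

const mockUser = createMockUserSlice({ "42": { id: "42", name: "Ada" } }, ["7"]);
console.log(mockUser.getById("42").data?.name); // Ada
console.log(mockUser.getById("7").isLoading); // true
console.log(mockUser.getById("99").isError); // true
```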

With the core problem defined and the decision to mock Vulcan’s API functions directly, we established a strong foundation for our testkit. By carefully considering Vulcan’s usage patterns and weighing different mocking strategies, we could approach the testkit design with clear priorities and direction.

In the next part, we’ll dive into the process of prototyping the testkit’s API with real tests. This approach helped us define the essential features needed to simulate Vulcan’s behavior effectively, paving the way for a testkit that is both powerful and developer-friendly.