The event sourcing architecture we didn’t build

Setting the scene: The board accumulation problem

When we first interviewed enterprise admins to understand their biggest pain points, one need kept surfacing as both important and unsatisfied: “Organize and clean the account.” Archive old boards, delete what’s no longer needed, and make sure every board has an owner.

Simple enough, right? Not exactly.

To understand why, consider how monday.com scales in enterprise accounts. We’re not talking about a single admin managing a tidy workspace. Our largest accounts can have tens of thousands of employees with many admins distributed across the organization – each responsible for different teams and workspaces.

At this scale, boards accumulate. A board – the fundamental unit of work in monday.com – can represent anything from a sprint backlog to a marketing campaign. Enterprise accounts build tens of thousands of them over the years. Project boards from completed initiatives linger. Test boards multiply. That Q3 campaign board from 2019? Still there.

The pain point was clear: there was no unified view. Information about what needed cleanup was scattered across multiple screens, with no clear indication of what was inactive, what was orphaned, or what should go.

The Data Lifecycle team set out to fix this. The goal: let admins configure retention policies, surface boards pending archival, and automatically archive boards without recent activity. We got it to beta in one quarter.

What we shipped for admins

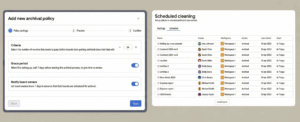

The feature is an admin dashboard with two core capabilities:

First, retention policy configuration. Admins set rules like “archive boards with no activity for 6 months” at the account level. Different accounts have different thresholds depending on their compliance requirements and storage needs.

Second, a view of upcoming archivals. Admins can see which boards are scheduled for archival in the coming week, along with owner names, workspace locations, and last activity dates. They can protect specific boards from archival if the content is still needed, even if it hasn’t been touched recently.

The system then runs automatically: boards that cross the inactivity threshold are archived without manual intervention, while admins retain visibility and control over exceptions.
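Under the hood, both capabilities come down to one date calculation. A board is due for archival once its last activity plus the account's threshold has passed. The TypeScript sketch below shows how an "upcoming archivals" list could be derived from that rule. It is an illustration, not our production code. The `RetentionPolicy` and `TrackedBoard` shapes and the `isProtected` flag are assumptions, as is expressing "6 months" as a day count (180).

```typescript
// Hypothetical shapes for illustration; not the service's real schema.
interface RetentionPolicy {
  accountId: number;
  inactivityDays: number; // e.g. 180 as an approximation of "6 months"
}

interface TrackedBoard {
  boardId: number;
  accountId: number;
  lastActiveMs: number;  // epoch milliseconds of the last recorded activity
  isProtected: boolean;  // an admin opted this board out of archival
}

const DAY_MS = 24 * 60 * 60 * 1000;

// The moment a board crosses its account's inactivity threshold.
function scheduledArchivalMs(board: TrackedBoard, policy: RetentionPolicy): number {
  return board.lastActiveMs + policy.inactivityDays * DAY_MS;
}

// Boards that will cross the threshold in (nowMs, nowMs + windowDays],
// i.e. what an "upcoming archivals" view could list.
function upcomingArchivals(
  boards: TrackedBoard[],
  policies: Map<number, RetentionPolicy>,
  nowMs: number,
  windowDays = 7,
): TrackedBoard[] {
  const windowEnd = nowMs + windowDays * DAY_MS;
  return boards.filter((board) => {
    const policy = policies.get(board.accountId);
    // No policy for the account, or an admin protected the board: never scheduled.
    if (policy === undefined || board.isProtected) return false;
    const dueMs = scheduledArchivalMs(board, policy);
    return dueMs > nowMs && dueMs <= windowEnd;
  });
}
```

This sketch deliberately leaves out overdue boards (due at or before `nowMs`). It assumes the archival worker picks them up on its next run.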

That’s the user-facing feature. The rest of this post is about what it took to build it – specifically, how we track “last activity” across millions of boards reliably enough to make those retention decisions.

But the path there required some interesting tradeoffs.

The architecture we didn’t build

Before diving into what we shipped, let me explain what we could have built – because understanding this makes the tradeoffs clearer.

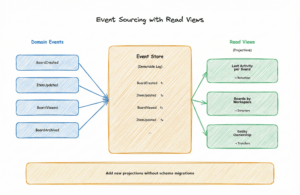

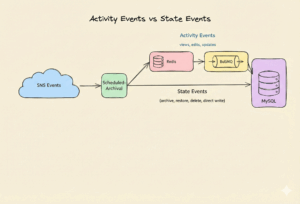

When you’re building activity tracking, there’s a well-established pattern: Event Sourcing with CQRS (Command Query Responsibility Segregation – separating how you write data from how you read it).

The core idea: every change becomes an immutable event appended to an event store. You don’t store the current state directly; you rebuild it by replaying events. Sounds inefficient? Here’s the trick: you create read views (also called projections) that precompute the data in whatever shape you need.

For board activity, you’d capture events like BoardCreated, ItemUpdated, and BoardViewed. From this stream, you’d build projections:

- “Last activity per board” for retention policies

- “Boards by workspace” for content directory

- “Entity ownership” for transfer workflows

Want to track document activity later? Add event types and create a projection. No schema migrations, and existing views keep working.
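Here is a minimal in-memory sketch of the pattern in TypeScript. The event shapes are simplified assumptions built from the event names above. `EventStore`, `lastActivityPerBoard`, and `boardsByWorkspace` are hypothetical names. The store only ever appends, and each projection is a fold over the replayed log. That is why adding a view means adding a function rather than migrating a schema.

```typescript
// Simplified, hypothetical event shapes based on the event names above.
type BoardEvent =
  | { type: "BoardCreated"; boardId: number; workspaceId: number; at: number }
  | { type: "ItemUpdated"; boardId: number; itemId: number; at: number }
  | { type: "BoardViewed"; boardId: number; userId: number; at: number };

// Append-only store: the class exposes no way to update or delete an event.
class EventStore {
  private readonly log: BoardEvent[] = [];

  append(event: BoardEvent): void {
    this.log.push(event);
  }

  // Replay every event in append order.
  replay(): readonly BoardEvent[] {
    return this.log;
  }
}

// Projection: "last activity per board" (for retention policies).
// Taking the max makes it correct even if events were appended out of time order.
function lastActivityPerBoard(events: readonly BoardEvent[]): Map<number, number> {
  const view = new Map<number, number>();
  for (const e of events) {
    const prev = view.get(e.boardId);
    if (prev === undefined || e.at > prev) view.set(e.boardId, e.at);
  }
  return view;
}

// Projection: "boards by workspace" (for a content directory).
function boardsByWorkspace(events: readonly BoardEvent[]): Map<number, number[]> {
  const view = new Map<number, number[]>();
  for (const e of events) {
    if (e.type !== "BoardCreated") continue; // only creation events carry a workspace
    const boards = view.get(e.workspaceId) ?? [];
    boards.push(e.boardId);
    view.set(e.workspaceId, boards);
  }
  return view;
}
```

A production system would typically update projections incrementally as events arrive instead of replaying the full log on every read. The replay here just keeps the sketch short.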

Why this was tempting

Here’s why this was particularly appealing for our team. The Data Lifecycle team builds multiple tools for enterprise admins: scheduled archival (this feature), scheduled deletion (coming soon), cross-account migration, and a content directory showing all entities with available actions.

Many of these share a common need: find entity IDs matching filters, then fetch metadata. Which boards haven’t been active in 6 months? Which items belong to a deleted user? Which documents exist in a workspace being migrated?

Event sourcing would give us a domain store we controlled, a unified view of entity lifecycles built from events we consume. Each new feature would query projections rather than requiring fresh integrations with core systems.

So why didn’t we build it?

Why we went for a pragmatic solution

Two main reasons.

We don’t own the domain entities. At monday.com, different teams own different core systems. We help admins manage the ecosystem. We don’t own boards, items, or the collaboration infrastructure. Building an event-sourcing layer would require coordinating architectural changes across multiple teams. That’s a substantial undertaking.

We had hypotheses, not validation. Our research told us admins wanted to organize and clean their accounts. But how would they actually use retention policies? Six-month thresholds? Per-workspace rules? We didn’t have a clear idea. Investing multiple quarters into infrastructure before validating the feature? Risky.

So we asked: what’s the minimum we need to ship and learn?

The answer: focus on boards (our largest and most numerous entity), use existing event infrastructure, and build a standalone service.

What we actually built

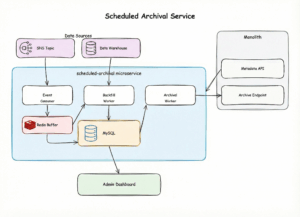

We built a microservice called Scheduled-Archival that tracks board activity independently. No coordination with other teams required. We could iterate fast.

The data model

The main data model is simple – a single table:

Scheduled_archival – the core tracking table, with columns `board_id`, `last_active_timestamp`, and `archival_status`.

Display information (owner name, workspace name, profile URLs) is fetched at runtime from the monolith. Admins need to see whose board is pending archival and where it lives before deciding to protect it, but we don’t need to store that ourselves. The monolith is the source of truth for metadata; we only track activity.
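Here is a sketch of that split, with hypothetical names throughout. `ArchivalRow` mirrors the three tracked columns, and its status values are assumed. `FetchBoardMetadata` stands in for whichever monolith endpoint returns display data; this post doesn't cover its real name or signature. The join is a pure function, so it can be tested without a network call.

```typescript
// Mirrors the Scheduled_archival columns; the status values are assumptions.
type ArchivalStatus = "active" | "archived";

interface ArchivalRow {
  board_id: number;
  last_active_timestamp: number; // epoch milliseconds
  archival_status: ArchivalStatus;
}

// Display-only fields owned by the monolith; never persisted by this service.
interface BoardMetadata {
  ownerName: string;
  workspaceName: string;
  profileUrl: string;
}

interface PendingArchivalView extends ArchivalRow {
  metadata: BoardMetadata | null; // null if the monolith returned nothing for this board
}

// Hypothetical batched lookup against the monolith.
type FetchBoardMetadata = (boardIds: number[]) => Promise<Map<number, BoardMetadata>>;

// Pure join of locally tracked activity with metadata fetched at request time.
function joinWithMetadata(
  rows: ArchivalRow[],
  metadata: Map<number, BoardMetadata>,
): PendingArchivalView[] {
  return rows.map((row) => ({ ...row, metadata: metadata.get(row.board_id) ?? null }));
}

// Request path: one batched metadata call per page of rows, nothing cached locally.
async function loadPendingArchivals(
  rows: ArchivalRow[],
  fetchBoardMetadata: FetchBoardMetadata,
): Promise<PendingArchivalView[]> {
  const metadata = await fetchBoardMetadata(rows.map((r) => r.board_id));
  return joinWithMetadata(rows, metadata);
}
```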

The backfill problem

We needed two data sources running in parallel:

- Real-time events from the board-events SNS topic (our pub/sub infrastructure for board events), published when someone views a board, creates an item, or updates a column.

- Historical data from our data warehouse. The `users_boards_daily` table holds granular activity records: billions of rows, processed in large batches.

Running both simultaneously created an obvious problem: both paths wrote to the same table. Imagine this sequence:

- Live event arrives: “Board 123 was viewed 2 seconds ago”

- Consumer writes last_active = now() to MySQL

- Backfill query returns: “Board 123’s last activity was 6 months ago”

- Backfill worker writes last_active = 6 months ago to MySQL

We would have lost real user activity. The board would look inactive when it wasn’t.

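The fix: an idempotency check on every write. Compare timestamps and update only if the incoming value is newer. Replaying the same input any number of times then produces the same result. The order of arrival also stops mattering, because a stale backfill value that lands after a fresh live event is simply ignored.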
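The guard is small enough to show in full. In this sketch an in-memory `Map` stands in for the MySQL table. `applyActivity` is a hypothetical name for the single write path that both the live consumer and the backfill worker would share. Replaying the sequence above leaves the fresh timestamp in place.

```typescript
// Stand-in for the tracking table: board_id -> last_active_timestamp (epoch ms).
type ActivityTable = Map<number, number>;

// Shared write guard for the live consumer and the backfill worker:
// keep the stored value unless the incoming timestamp is strictly newer.
// Returns true if the row changed.
function applyActivity(table: ActivityTable, boardId: number, incomingTs: number): boolean {
  const current = table.get(boardId);
  if (current !== undefined && current >= incomingTs) {
    return false; // stale or duplicate input is a no-op, so retries and replays are harmless
  }
  table.set(boardId, incomingTs);
  return true;
}

// Replaying the race from above: live event first, stale backfill second.
const demoTable: ActivityTable = new Map();
const nowMs = Date.now();
applyActivity(demoTable, 123, nowMs - 2000);                     // live: viewed 2 seconds ago
applyActivity(demoTable, 123, nowMs - 180 * 24 * 60 * 60 * 1000); // backfill: ~6 months ago, ignored
```

Against MySQL, the comparison is safer inside the statement itself, for example an upsert whose update clause uses `GREATEST`. That way the check and the write happen atomically instead of as a separate read followed by a write.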

The Redis buffer

Enterprise accounts generate thousands of board events per second during peak activity. Writing each one directly to MySQL would overwhelm the database with the usual suspects: connection pool exhaustion from too many concurrent connections, and contention for row locks.

So we buffered through Redis:

SNS → scheduled-archival consumer → Redis sorted set → BullMQ job (worker inside the scheduled-archival microservice) → MySQL

The consumer writes each event to a Redis sorted set, using the board ID as the member and the timestamp as the score. A BullMQ job (BullMQ is a Redis-based job queue) periodically drains these entries and flushes them to MySQL in batches. BullMQ handles retries and scheduling out of the box, and batching keeps write throughput high without hammering the database.

We chose the sorted set for a reason: it deduplicates events for the same board, keeping a single, latest timestamp per board. If a board receives 50 events in a single flush interval, we only write the most recent timestamp. But that’s a bonus; the real win is turning 15k individual writes into manageable batches.
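The buffer has two properties, one entry per board and batched draining, and both can be modeled in memory. This is a sketch, not the service's code. `ActivityBuffer` and `drain` are hypothetical names. A `Map` plays the sorted set, with board ID as member and timestamp as score. The `writeBatch` callback stands in for one multi-row MySQL write.

In Redis itself, `ZADD` with the `GT` option (Redis 6.2+) only ever raises an existing member's score. `ZPOPMIN key count` removes and returns the lowest-scored members in one command, which is one way a drain job could claim a batch.

```typescript
// In-memory model of the sorted set: member = board ID, score = timestamp (epoch ms).
class ActivityBuffer {
  private readonly scores = new Map<number, number>();

  // Like ZADD with GT: each board appears once, and its score only moves forward.
  record(boardId: number, ts: number): void {
    const current = this.scores.get(boardId);
    if (current === undefined || ts > current) this.scores.set(boardId, ts);
  }

  get size(): number {
    return this.scores.size;
  }

  // Like ZPOPMIN with a count: remove and return up to `count` entries, lowest score first.
  popOldest(count: number): Array<[number, number]> {
    const batch = Array.from(this.scores.entries())
      .sort((a, b) => a[1] - b[1])
      .slice(0, count);
    for (const [boardId] of batch) this.scores.delete(boardId);
    return batch;
  }
}

// One run of the periodic drain job: flush everything in fixed-size batches.
// Returns how many batches (i.e. database writes) were issued.
function drain(
  buffer: ActivityBuffer,
  batchSize: number,
  writeBatch: (rows: Array<[number, number]>) => void,
): number {
  if (batchSize < 1) throw new RangeError("batchSize must be at least 1");
  let batches = 0;
  while (buffer.size > 0) {
    writeBatch(buffer.popOldest(batchSize));
    batches++;
  }
  return batches;
}
```

Sorting on every pop is fine for illustration. Redis keeps the set ordered, so popping a batch there doesn't need a full sort.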

However, here’s a nuance: status changes bypass Redis entirely.

When a board is archived or restored, the status change is written directly to MySQL. We didn’t enable Redis persistence, so the buffer isn’t durable: if Redis fails, any queued events are lost.

For activity timestamps, losing an event is acceptable: the next event updates the value anyway. For board status? Losing an archive event would leave our view out of sync with reality. That’s corruption we can’t afford.
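The routing rule fits in a few lines. The event shapes, `routeBoardEvent`, and the two sink interfaces below are hypothetical, and the writes are synchronous for brevity. Activity goes through the non-durable buffer. Archive and restore events take the direct path, so a failure surfaces to the caller instead of vanishing with the buffer.

```typescript
type BoardStatus = "archived" | "restored";

// Hypothetical event shapes: activity covers views, item creation, column updates.
type IncomingEvent =
  | { kind: "activity"; boardId: number; at: number }
  | { kind: "status"; boardId: number; status: BoardStatus; at: number };

// Lossy but cheap sink, e.g. the Redis sorted-set buffer.
interface ActivitySink {
  record(boardId: number, at: number): void;
}

// Durable sink, e.g. a direct MySQL write.
interface StatusStore {
  writeStatus(boardId: number, status: BoardStatus, at: number): void;
}

function routeBoardEvent(
  event: IncomingEvent,
  buffer: ActivitySink,
  db: StatusStore,
): "buffered" | "direct" {
  if (event.kind === "status") {
    // No in-memory hop: if this write throws, the error propagates to the caller.
    db.writeStatus(event.boardId, event.status, event.at);
    return "direct";
  }
  // A dropped activity timestamp is superseded by the board's next event.
  buffer.record(event.boardId, event.at);
  return "buffered";
}
```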

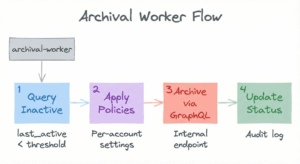

The archival worker

The payoff: a scheduled job that runs periodically.

- Query boards whose last_active_timestamp is older than the retention threshold

- Apply account-specific policies

- Exclude admin-protected boards

- Archive via internal monolith endpoint

- Update status and audit trail
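A single pass of the worker could be sketched as follows. The dependencies are injected as functions because the real query, policy store, and monolith endpoint aren't described here. `runArchivalPass`, `ArchivalDeps`, and the result shape are hypothetical, and the calls are synchronous for brevity. Boards are processed independently, so one failed archive call doesn't abort the pass.

```typescript
interface BoardActivity {
  boardId: number;
  accountId: number;
  lastActiveMs: number; // epoch milliseconds
}

// Hypothetical dependencies, one per step in the list above.
interface ArchivalDeps {
  candidates: () => BoardActivity[];                             // 1. tracked boards to evaluate
  inactivityDaysFor: (accountId: number) => number | undefined; // 2. account policy, undefined = none
  isProtected: (boardId: number) => boolean;                    // 3. admin exclusions
  archiveBoard: (boardId: number) => boolean;                   // 4. monolith call, false on failure
  markArchived: (boardId: number, atMs: number) => void;        // 5. status + audit trail
}

const DAY_MS = 24 * 60 * 60 * 1000;

function runArchivalPass(deps: ArchivalDeps, nowMs: number): { archived: number[]; failed: number[] } {
  const archived: number[] = [];
  const failed: number[] = [];
  for (const board of deps.candidates()) {
    const days = deps.inactivityDaysFor(board.accountId);
    if (days === undefined) continue;                          // account has no retention policy
    if (nowMs - board.lastActiveMs < days * DAY_MS) continue;  // still within its account's threshold
    if (deps.isProtected(board.boardId)) continue;             // an admin chose to keep it
    if (deps.archiveBoard(board.boardId)) {
      deps.markArchived(board.boardId, nowMs);                 // record only after the monolith confirms
      archived.push(board.boardId);
    } else {
      failed.push(board.boardId);                              // untouched; a later pass can retry it
    }
  }
  return { archived, failed };
}
```

Writing status only after the monolith confirms the archive keeps the tracking table from claiming an archival that never happened.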

What we traded off

This architecture got us to beta in one quarter. Very few infrastructure changes outside our team. Real enterprise usage data flowing in.

| Aspect | Event Sourcing | Our Approach |
| --- | --- | --- |
| Entity coverage | Any entity type | Boards only |
| Infrastructure changes | Significant | Minimal |
| Cross-team coordination | Substantial | Minimal |
| Reusability for other features | High | Limited |
| Implementation time | ~9 months | 3 months |

Limitations of our pragmatic approach

- Single-entity focus: extending to items, documents, or automations requires new development, not just adding projections.

- Feature-specific data: scheduled deletion and content directory will need their own data sources.

- No historical replay: we can’t reconstruct past states from an event stream.

When shared infrastructure earns its cost

Scheduled archival is now generally available. Our immediate focus: extending it with additional retention rules and building scheduled deletion.

Event sourcing remains compelling, especially as we see common patterns emerging across features. But we’re not there yet. The trigger would be clear: when multiple features need overlapping data about entities beyond boards – documents, dashboards, assets – that’s when a shared event store starts paying for itself. Right now, we’re building feature-specific solutions. When we find ourselves duplicating “find entities by account + filter by activity” logic for the third or fourth time, that would be the signal to invest in shared infrastructure.

For us, the lesson was straightforward: ship what you can validate, and let each feature teach you what the architecture should eventually become.