Coding with Cursor? Here’s Why You Still Need TDD

AI-assisted coding has become hard to ignore. Tools like Cursor, Codex, and Copilot are becoming increasingly integrated into developers’ daily workflows. They promise speed, creativity, and automation, and in many ways, they deliver on these promises. But like any powerful tool, they come with trade-offs.

When I first started using these tools, I fully embraced them. I leaned into “vibe coding,” relying on the AI to generate large chunks of logic without much structure. I stopped doing what had always worked for me in the past, Test-Driven Development (TDD), and assumed that the new tools made those older practices obsolete. They didn’t.

At monday.com, I work on the CRM product, where we’re responsible for handling all the interactions our users have with their customers. As part of that, I had to build an SMTP email parser, a task that sounds perfect for AI. It’s not about system architecture or cutting-edge algorithms; it’s just clean, straightforward business logic. So I leaned on AI heavily.

But what seemed like an ideal fit quickly turned into a mess.

This post outlines how I returned to TDD, not by rejecting AI, but by integrating it. What I’ve found is that combining the discipline of TDD with the generative power of AI creates a workflow that’s faster, more reliable, and much easier to reason about.

The Main Problems with AI

Before diving into the approach itself, it’s important to understand what it’s trying to solve. Based on my experience, there are three core issues that arise when relying too heavily on AI for software development:

1. Almost Right Isn’t Good Enough

In many cases, debugging AI-generated code ends up being more time-consuming than writing it manually. Refactoring becomes painful, and understanding the logic, especially when it’s domain-specific, can be even harder. The further you get from your own logic, the harder it is to trust the code.

AI can often generate “almost correct” code. It gets close, sometimes impressively so, but rarely nails the full intent. The output often lacks adherence to clean code principles, or it subtly misinterprets the requirements. It might function, but not in a way that’s easy to maintain or reason about.

AI is very good at getting you to 80%. But when you give it too much control, getting from 80% to 100% often becomes slower and more frustrating than doing the entire thing yourself.

2. The Process Feels Slow and Disconnected

One of the more subtle problems is that the workflow becomes disjointed. You wait. You stare at a loading spinner. How long will a response take? In the meantime, you’re either doing nothing or trying to context switch into another task.

Some people manage this by multitasking, juggling several tickets in parallel. Personally, I’ve found that context switching like that makes me less productive. I lose focus, get mentally scattered, and end up bouncing between half-finished thoughts. The feedback loop is slow and vague, gradually chipping away at your momentum.

3. You Lose Touch With Your Codebase

This one was the most unexpected for me. When you rely too much on AI, you gradually stop being the author of your code; you become the reviewer. And that distance matters.

When you review someone else’s code (or AI’s), you don’t see the decisions, the discarded paths, the tradeoffs. You see only the outcome. That works fine for simple utilities, but when you’re building more complex systems, anything with nuanced logic or deep business context, that separation becomes dangerous.

You stop understanding your own system. You stop seeing the edges where things can break. And when they do, you’re not well-equipped to fix them.

Why TDD?

These challenges led me to revisit a practice I had gradually abandoned: Test-Driven Development. What I found is that TDD isn’t just compatible with AI-assisted development; it’s one of the most effective ways to make AI useful.

Before explaining how I integrate TDD into my current workflow, it’s worth reviewing what TDD actually is and why it still matters.

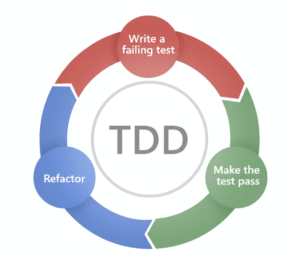

Test-Driven Development (TDD) is a software development methodology where tests are written before the code itself. The process follows a simple cycle:

- Write a failing test.

- Write the minimal code required to make it pass.

- Refactor the code for clarity and maintainability.

- Repeat.
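As a rough illustration of one pass through this cycle, here is a minimal sketch in Python. The function name `parse_subject` and its behavior for a missing header are assumptions made up for this example, not the actual parser described in this post. The two tests come first; the function body is the smallest implementation that makes them pass.

```python
from email import message_from_string


def parse_subject(raw_message: str) -> str:
    """Hypothetical function under test: return the Subject header, or "" if absent."""
    # Step 2: the smallest implementation that satisfies the tests below.
    message = message_from_string(raw_message)
    return message.get("Subject", "")


# Step 1: these tests are written first and fail until parse_subject exists.
def test_returns_subject_header():
    raw = "From: alice@example.com\nSubject: Quarterly report\n\nHi Bob"
    assert parse_subject(raw) == "Quarterly report"


def test_missing_subject_returns_empty_string():
    raw = "From: alice@example.com\n\nHi Bob"
    assert parse_subject(raw) == ""
```

The tests are plain functions with `assert` statements, so a runner such as pytest can collect them as written. Step 3, refactoring, happens only once both tests are green.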

This cycle may seem rigid at first, but it introduces three key benefits that are especially relevant when working with AI:

- Clarity of intent: Writing a test forces you to define what you want to build before thinking about how to build it. You avoid overengineering, reduce ambiguity, and stay grounded in user requirements.

- Confidence in behavior: Since each piece of functionality is backed by a test, you can move faster without constantly second-guessing whether your changes break something. Edge cases are codified, not assumed.

- Small, controlled steps: TDD encourages working in tight feedback loops. Every cycle becomes a checkpoint: write, verify, and improve. This rhythm reduces the likelihood of bugs, keeps the codebase clean, and makes the system easier to reason about as it grows.
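To make “edge cases are codified, not assumed” concrete, here is a small sketch, again in Python. The helper `extract_sender_address` and the rule that addresses are lowercased are hypothetical requirements chosen for illustration. Each edge case is recorded as an input and an expected result, so the list itself documents the behavior.

```python
from email.utils import parseaddr


def extract_sender_address(from_header: str) -> str:
    """Hypothetical helper: return the bare, lowercased address from a From header."""
    _display_name, address = parseaddr(from_header)
    return address.lower()


# Each edge case is written down as an input/expected pair instead of assumed.
EDGE_CASES = [
    ("Alice Smith <alice@example.com>", "alice@example.com"),  # display name
    ("alice@example.com", "alice@example.com"),                # bare address
    ("Alice <ALICE@Example.COM>", "alice@example.com"),        # mixed case
    ("", ""),                                                  # missing header
]


def test_extract_sender_address_edge_cases():
    for from_header, expected in EDGE_CASES:
        assert extract_sender_address(from_header) == expected, from_header
```

When a new edge case turns up in production, it becomes one more line in the list and a failing test to fix.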

TDD Makes the AI Pain Go Away

In short, TDD creates structure, and when you’re working with generative tools that tend to produce large, imprecise outputs, structure is exactly what’s missing.

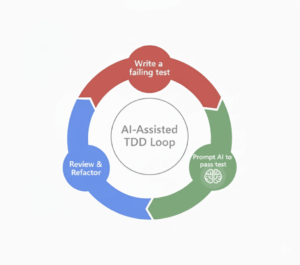

Let’s dive into the new loop I’m suggesting, one that’s working for me in my daily work. Previously, the TDD cycle was simple: Write a test → Write the code → Refactor. Now, we can leverage AI within that loop to make the process faster and more effective.

- Start with the test – No vibe coding here. Just as before, the test should be concise and focused, testing exactly one thing. This part of the workflow hasn’t changed, and it shouldn’t. You can use autocomplete, sure, but AI offers limited value here. You know your domain; writing the test should feel easy.

- Prompt the AI with context – This is where AI starts to shine. Once the test is written, use it as part of your prompt. Give the AI a clear structure, a defined goal, and a mechanism for self-evaluation. You’re no longer saying “build me a feature.” You’re saying:

“Here’s the test. Write code that makes it pass.”

This clarity improves both speed and accuracy.

- Review and refactor – When the AI finishes, you return to an active role. Ask yourself: Is the code clean? Is it readable? Does it need refactoring?

If so, refactor manually or with the help of AI. This is an excellent opportunity to “teach” the AI your preferences by guiding it through your standards.

This iterative refinement builds momentum and raises quality.

Instead of prompting the AI with vague or large-scale instructions, such as “build me an SMTP email parser,” I use this process to get a better experience and better output. The result is a tighter feedback loop, better control, and, most importantly, a stronger understanding of the codebase. I’m not just reviewing code that was generated for me; I’m designing the system through tests and using AI to assist with implementation details. The AI can also learn from the process: instruct it to create Cursor rules for the things you corrected.

The Takeaway

The promise of AI is speed and scale, but without structure, you trade clarity for chaos. TDD brings that structure back.

When you combine a test-first mindset with smart AI prompting, you don’t just write code, you build systems you can trust. And in a world of accelerating automation, trust might just be your biggest advantage.

In the age of AI, TDD isn’t legacy; it’s the way to make AI work for you.